Smart Tech, Dumb Decisions: The Invisible Threat of AI-Powered Wearables in Secure Spaces

What if I told you I didn’t need a lab, a grant, or a research team to build a live surveillance and intelligence-gathering platform? All I needed was a few lines of code, a pair of smart glasses (or a webcam), access to a handful of public APIs — and an old laptop.

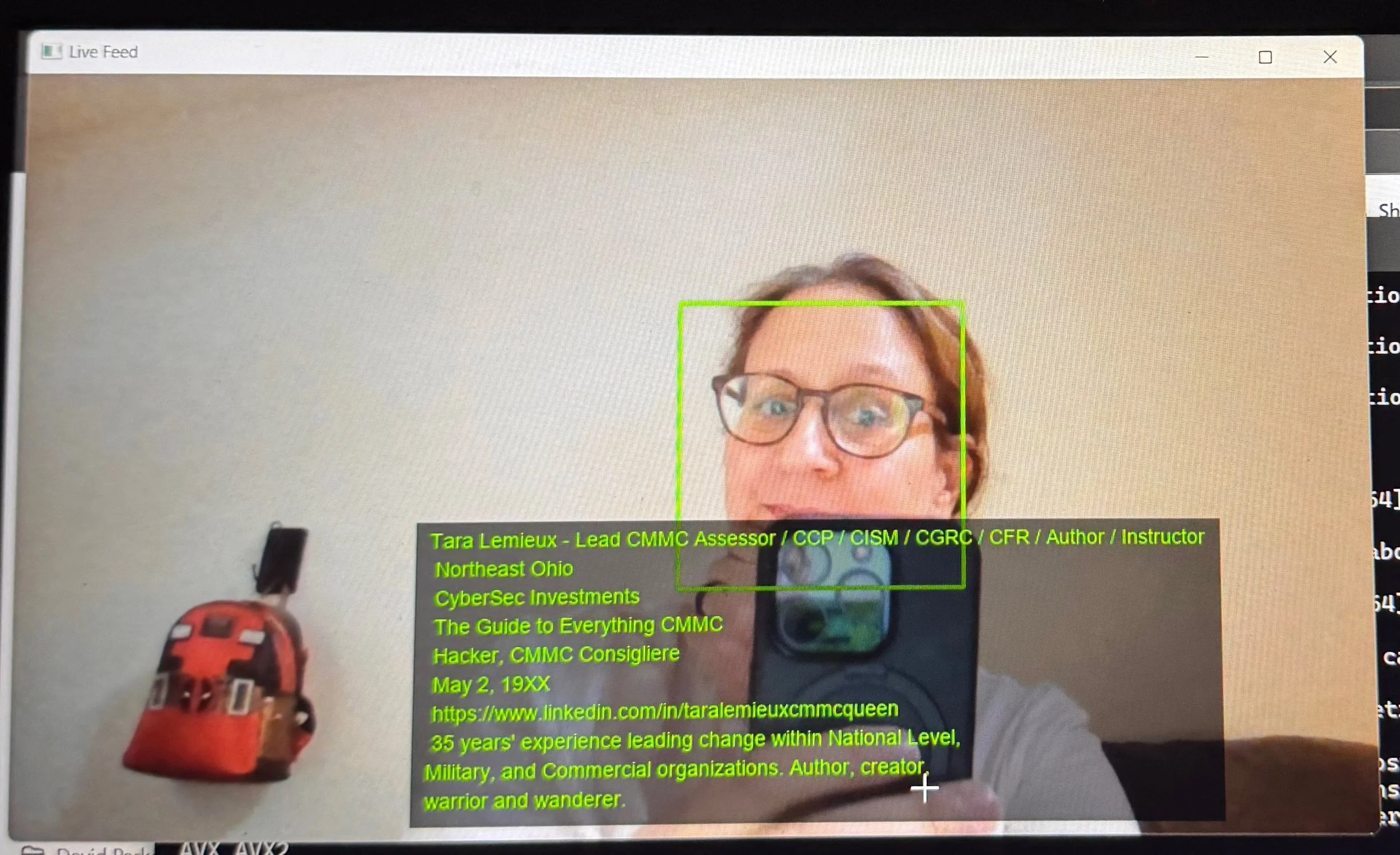

And with that, I can identify faces in real time, enrich them with open-source intelligence, geotag their location, and monitor their physical movements — all in seconds. No special hardware. No backdoor access. Just determination, an open internet, and consumer-grade AI.

Here’s how it works:

A face appears in the camera’s field of view — mine, yours, your director of R&D. Within seconds, it’s matched. Not just with a name, but with LinkedIn profiles, affiliations, career history, agency ties, publications, and skills.

Figure 1: AI wearable tech promises convenience but also poses serious risks. This is a single frame from a video my smart glasses captured. Our personal data can be easily exploited, leaving us vulnerable to privacy breaches and manipulation. Stay informed, be cautious.

All of that metadata gets pulled, parsed, and presented in a translucent hover box. If you’re wearing smart glasses, it appears right in front of you — projected over the real-world person you’re looking at.

Right now, for testing, I’ve limited the enrichment to LinkedIn. But let’s be honest: this engine can integrate with nearly any API — property records, business filings, GitHub activity, social media, credential leaks, and public purchase records. In effect, a face becomes a search key — and the entire internet becomes the query.

But recognition is only the beginning.

Every time the camera sees that face again, whether across a hallway or across a city, it logs it. No prompts. No alerts. Just quiet observation. It geotags the interaction. Captures new behavior. Detects changes: a different hairstyle, a new badge, a companion. Ultimately, it builds a pattern of life. Where someone goes. Who they’re near. When they appear. What they carry.

Over time, it builds a dossier that no human could maintain. Not unless they were watching you, 24/7.

And yes, all of this data can be enhanced even further.

The system evaluates visible traits: tattoos, glasses, ID badges, uniforms, even gait and body metrics. If it sees the same tattoo again, even half-hidden under a sleeve, it knows. If someone walks the same way, wears the same badge, carries the same gear, it knows. Agency logos? Matched. Sensitive material? Flagged. It doesn’t need to see the face anymore; it already knows what it’s looking for.

This wasn’t built in a hardened lab or funded by DARPA. It was written in my living room. On my own time. On a machine that crashes twice a week.

And it works — shockingly well.

So what are the risks? And how could this be exploited by those with more nefarious intentions?

In the wrong hands, this capability becomes a powerful surveillance weapon. Patterns of life can be reconstructed with stunning precision — revealing daily routines, affiliations, sensitive meetings, and even off-duty habits of cleared personnel.

It enables adversaries to map networks, anticipate movements, and identify vulnerabilities without ever breaching a system. With enough passive collection, even those operating under deep cover or within secure environments can be exposed.

At scale, this shifts the balance of power — away from hardened facilities and toward whoever controls the data stream. In that world, visibility isn't just a liability; it's an exploit waiting to be used.

Consider a few real-world scenarios:

An insider equipped with AI-powered wearables walks the halls of a defense contractor facility, silently identifying employees. LinkedIn profiles, public bios, and social media posts are scraped and overlaid in real time — names, titles, affiliations, geotags, even property records appearing mid-stride.

A lab technician passes by with a prototype in hand. The system captures the badge, the object, the face. It tags the frame, timestamps the event, and begins constructing a project timeline — hallway by hallway, frame by frame.

A law enforcement officer, senior agency official, or high-value target is spotted in public — not in uniform, no badge in sight. But something gives them away: the way they walk, a visible tattoo, a familiar bag. The system logs it, enriches it, and quietly updates the profile.

Perhaps it could be used to identify a journalist, a whistleblower, a dissident — anyone — through the curve of a cheekbone, a forgotten breadcrumb, or a shared node in the social graph. It doesn’t need much. One confirmed match is enough to unravel the rest. From there, the data flows — enriched in real time, quietly compiled, and optionally stored for whoever needs it next.

Ghost profiles form from presence alone — subtle patterns in movement, location, proximity, and timing. Each person becomes a breadcrumb. Each room, a layer of context. Even a glance can become a data point. Quietly and relentlessly, the system constructs a detailed portrait of cleared personnel — without a single word exchanged or credential offered.

The Regulatory and Operational Risk You’re Not Tracking

From a compliance and legal standpoint, this technology introduces a silent, escalating threat — one most organizations aren't prepared to manage.

If facial recognition is operating inside your facility — whether authorized, shadow-deployed, or simply overlooked — you could already be in violation of critical regulatory frameworks, including:

CUI handling mandates under NIST SP 800-171 and CMMC

PII and PHI protections governed by HIPAA, GDPR, or state privacy laws

Workplace surveillance laws that require employee disclosure under labor codes

ITAR and export control restrictions, particularly if captured images or metadata expose controlled technologies, such as a prototype carried down a hallway

Third-party risk requirements, especially when contractors, partners, or visitors act as collection vectors

Here’s the part that should keep you up at night: none of this requires a breach. No firewall is tripped. No credentials are stolen. This is ambient surveillance — passive, deniable, and almost always invisible to your current security stack.

While your SIEM hunts for lateral movement and your SOC flags zero-days, a consumer-grade lens may already be scanning faces, logging behaviors, and building pattern-of-life dossiers — right inside your walls.

Governance frameworks aren’t built to handle this. Your insider threat program probably isn’t calibrated for it.

And the most alarming part?

I built this system on a beat-up laptop, in my living room — watching a Paul Reubens documentary. No team. No budget. Just time, curiosity, and a few lines of code.

Imagine what a nation-state could do.

Because this works, and it works shockingly well. It watches… it learns… it remembers.

Every face it sees becomes a thread in a larger pattern — a name, a role, a digital history pulled from publicly available data and stitched together into something terrifyingly complete.

No alerts. No red flags. Just quiet observation — a slow accumulation of details most people don’t realize they’re leaving behind. The system identifies who you are, where you’ve been, who you’re near, and what you’re doing. It builds context, it draws connections, it maps your behavior — all without you ever knowing.

Now imagine that capability walking into your facility — your test lab, your war room. Not deployed by a foreign adversary with a drone or zero-day exploit, but worn casually by an employee, a contractor, or a visitor.

No one would need to point a camera or say a word, because the system is always on: silently capturing, geotagging, and learning from everything it sees. Every glance becomes data. Every moment, another clue.

And by the time you realize it was there… it’s already gone.

This isn’t hypothetical, and it isn’t five years out. It’s here today, powered by nothing more than curiosity and some Python code.

And if I could build it?

Someone already has. Someone more resourced and less ethical. Someone who doesn’t want you to know they’re watching.

No sign-in sheet will catch it, nor will your neatly written policy manual matter once it walks through your front door wearing a smile — and a lens.

Because the next breach won’t be a file transfer or a database dump. It’ll be a glance. A nod. A walk down the hallway. You won’t hear it. You won’t see it. But it will see you.

And when it does, it won’t just recognize who you are; it will begin to build a detailed composite of your background, your skill sets, your affiliations… along with everything else you probably never intended to share: your routines, your habits, your vulnerabilities.

Not just gathering information, but helping to produce meaningful intelligence.